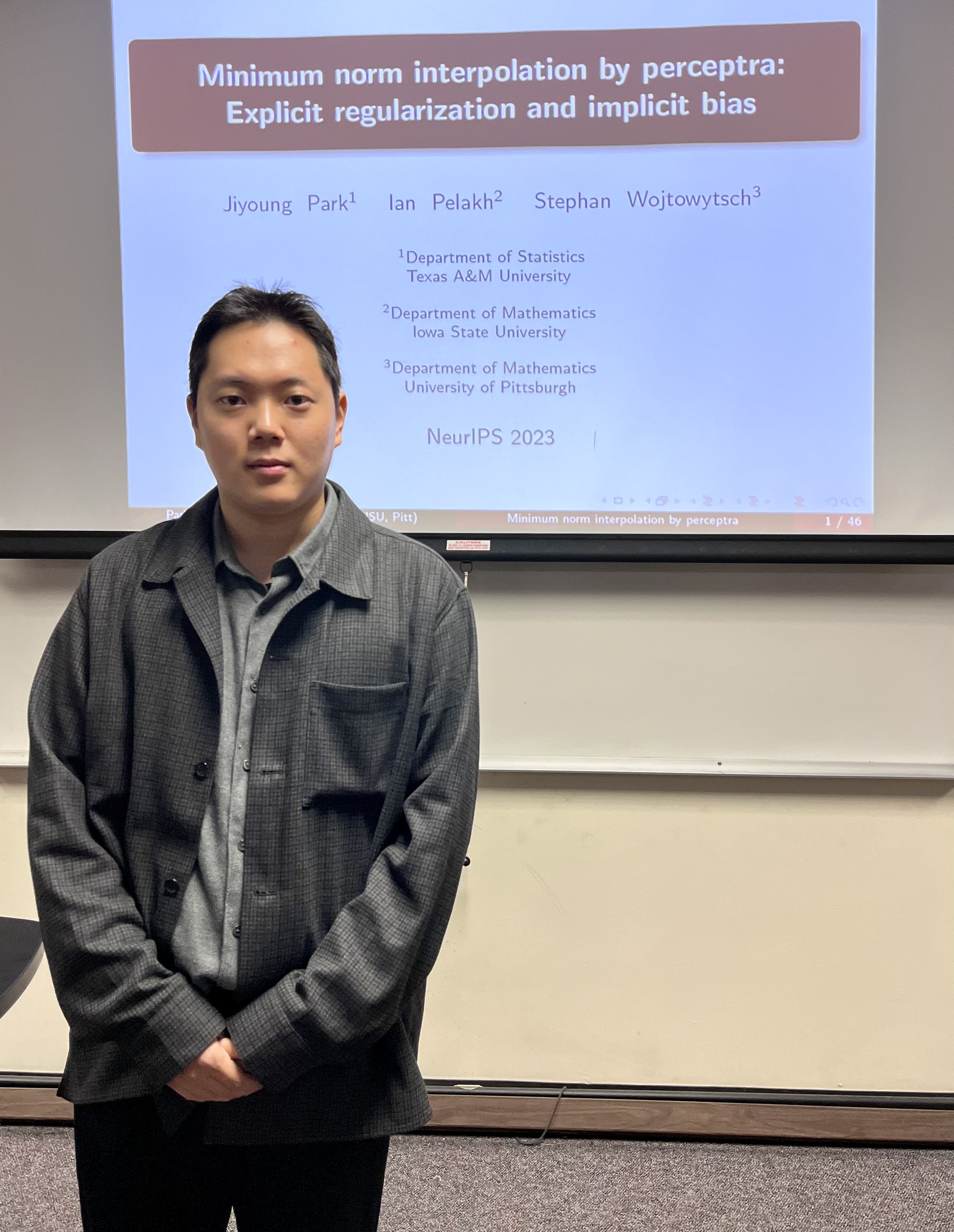

Stat Cafe - Jiyoung Park

- Time: Wednesday 11/15/2023 from 11:30 AM to 12:20 PM

- Location: BLOC 448

- Pizza and drinks provided

Topic

Minimum Norm Interpolation by Two-Layer ReLU Neural Networks

Abstract

Despite their empirical success, neural networks still lack a satisfactory theoretical explanation for their effectiveness. One open question is how well a neural network fits data and how it interpolates in regions without data. We investigate this problem for two-layer ReLU neural networks. Our analysis shows that weight-decay-penalized empirical risk minimizers converge to a minimum norm interpolant as the dataset size grows, provided the strength of the regularizer and the number of parameters are scaled appropriately. As an application, we show that these results can be leveraged to explore the implicit bias inherent in common optimization algorithms. The talk will primarily introduce the essential theoretical components needed to analyze two-layer ReLU neural networks, along with the convergence results.
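
For orientation, the setting described in the abstract can be sketched in a standard form. The notation here is an assumption made for illustration and is not taken from the talk: $m$ is the network width, $n$ the dataset size, $\lambda$ the regularization strength, and the squared loss and the exact form of the penalty are one common choice among several.

$$
f_\theta(x) = \sum_{i=1}^{m} a_i \,\sigma\!\left(w_i^\top x + b_i\right), \qquad \sigma(z) = \max(0, z),
$$

$$
\hat{\theta} \in \arg\min_{\theta} \; \frac{1}{n} \sum_{j=1}^{n} \bigl(f_\theta(x_j) - y_j\bigr)^2 \;+\; \lambda \sum_{i=1}^{m} \bigl(a_i^2 + \lVert w_i \rVert^2\bigr).
$$

In this notation, "scaled appropriately" would refer to how $\lambda$ and $m$ are chosen as functions of $n$; the precise rates are part of the results presented in the talk and are not reproduced here.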

Presentation

Recording

Gallery