Stat Cafe - Isaac Ray

GGBoost - Graph Gradient Boosting

- Time: Monday 2/5/2024 from 11:40 AM to 12:30 PM

- Location: BLOC 448

- Pizza and drinks provided

Description

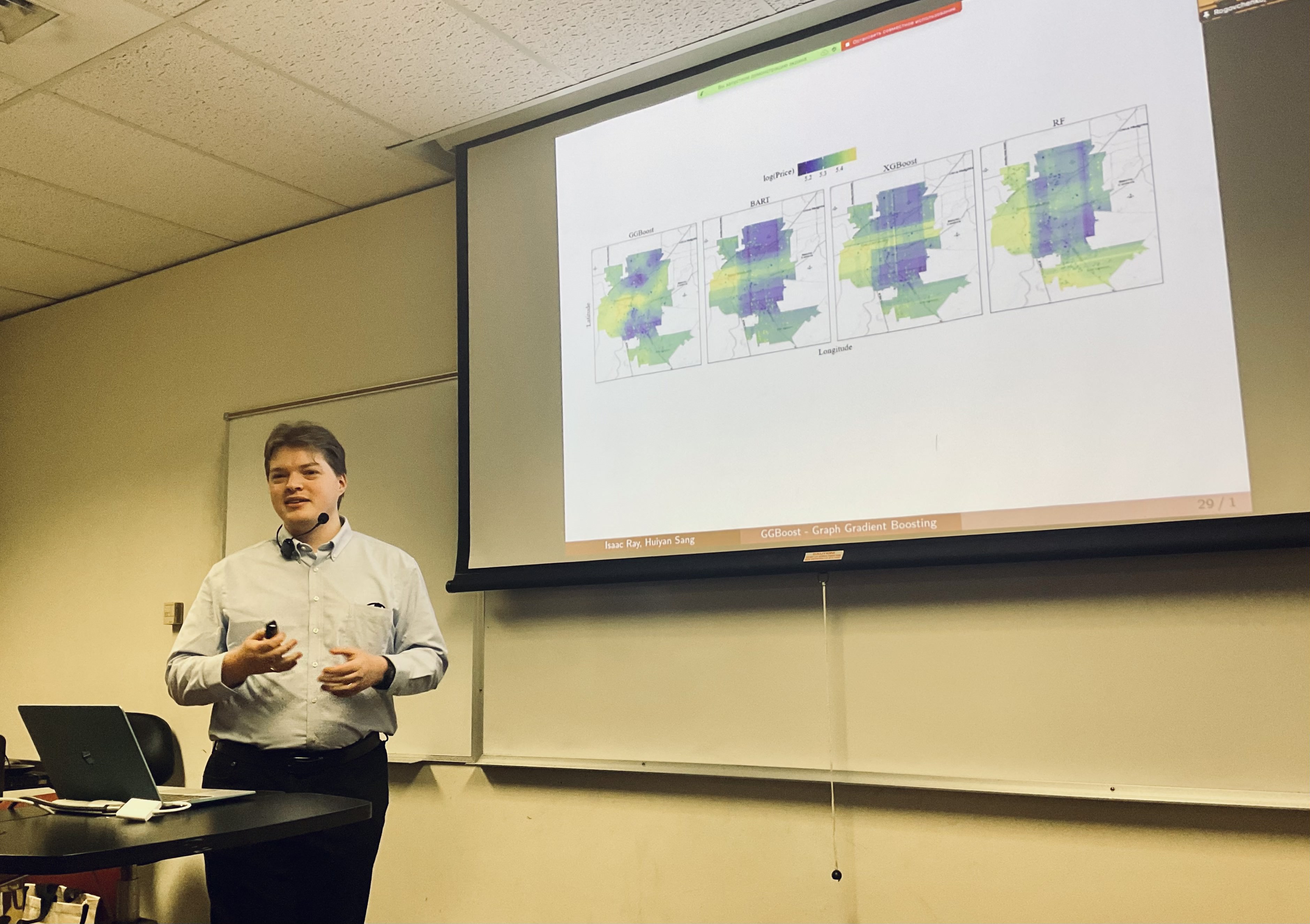

Gradient-boosted decision trees (GBDTs) such as XGBoost and LightGBM have become extremely popular because of their exceptional performance on tabular data in machine learning tasks such as regression and classification. We present a novel graph-split-based gradient-boosted trees method, called GGBoost, which improves the performance of GBDTs on complex data with graph relations. The method uses a highly flexible split rule that respects the graph structure, thereby relaxing the axis-parallel split assumption found in most existing GBDTs. To construct the graph-split-based decision tree, we describe a scalable greedy search algorithm based on recursive updates of gradients on arborescences.
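For readers new to the idea, here is a minimal sketch of what a greedy split search on an arborescence (a rooted, directed tree) could look like. It is an illustration under stated assumptions, not the GGBoost algorithm from the talk: the function name `best_edge_cut`, the parent-array encoding of the tree, and the use of the standard second-order gain from XGBoost-style boosting are all choices made for this example. Each candidate split removes one edge, which separates a subtree from the rest of the nodes; gradient and Hessian sums for every subtree are accumulated in a single bottom-up pass.

```python
def best_edge_cut(parent, grad, hess, lam=1.0):
    """Find the edge of a rooted tree whose removal gives the best split.

    parent[v] is the parent of node v, or -1 for the root (hypothetical
    encoding chosen for this sketch). grad and hess hold per-node first
    and second derivatives of the loss. Returns (child, gain), where the
    cut edge is (parent[child], child).
    """
    n = len(parent)
    children = [[] for _ in range(n)]
    root = None
    for v, p in enumerate(parent):
        if p < 0:
            root = v
        else:
            children[p].append(v)

    # Preorder traversal: every node appears after its parent.
    order, stack = [], [root]
    while stack:
        v = stack.pop()
        order.append(v)
        stack.extend(children[v])

    # Bottom-up pass: push each subtree's sums into its parent.
    G, H = list(grad), list(hess)
    for v in reversed(order):
        p = parent[v]
        if p >= 0:
            G[p] += G[v]
            H[p] += H[v]

    def score(g, h):
        # Second-order structure score with L2 penalty lam (assumed here).
        return g * g / (h + lam)

    base = score(G[root], H[root])
    best_child, best_gain = None, float("-inf")
    for v in order:
        if v == root:
            continue
        # Cutting the edge above v separates subtree(v) from the rest.
        gain = score(G[v], H[v]) + score(G[root] - G[v], H[root] - H[v]) - base
        if gain > best_gain:
            best_child, best_gain = v, gain
    return best_child, best_gain


# Path graph 0 -> 1 -> 2 -> 3 with gradients that change sign in the middle.
child, gain = best_edge_cut([-1, 0, 1, 2], [1.0, 1.0, -1.0, -1.0], [1.0] * 4, lam=0.0)
print(child, gain)
```

In this toy example the best cut is the edge above node 2, which separates the nodes with positive gradients from those with negative gradients. Unlike an axis-parallel threshold on a single feature, the two sides of the split are defined by the connectivity of the tree.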

Presentation

Recording

Gallery